
Monthly AI Tool Recommendations: A Deep Dive into Automated Video Localization and Dubbing


The Global Content Conundrum: Why Traditional Dubbing is Broken

Ever binge-watched a foreign show and been captivated by the story? You have a team of translators, voice actors, and sound engineers to thank. But that traditional process is a huge bottleneck in our hyper-speed content world.

The demand for globalized video has exploded. Yet, localizing a single hour of video can take weeks and cost thousands of dollars. It’s a slow, expensive, and logistically nightmarish process that leaves most content creators locked into a single language.

This is where the magic of **AI video localization** comes in. Imagine taking your latest marketing video, e-learning course, or YouTube deep-dive and, with a few clicks, having it dubbed in Spanish, Japanese, and French, all while retaining your own voice. This isn’t science fiction; it’s the new reality.

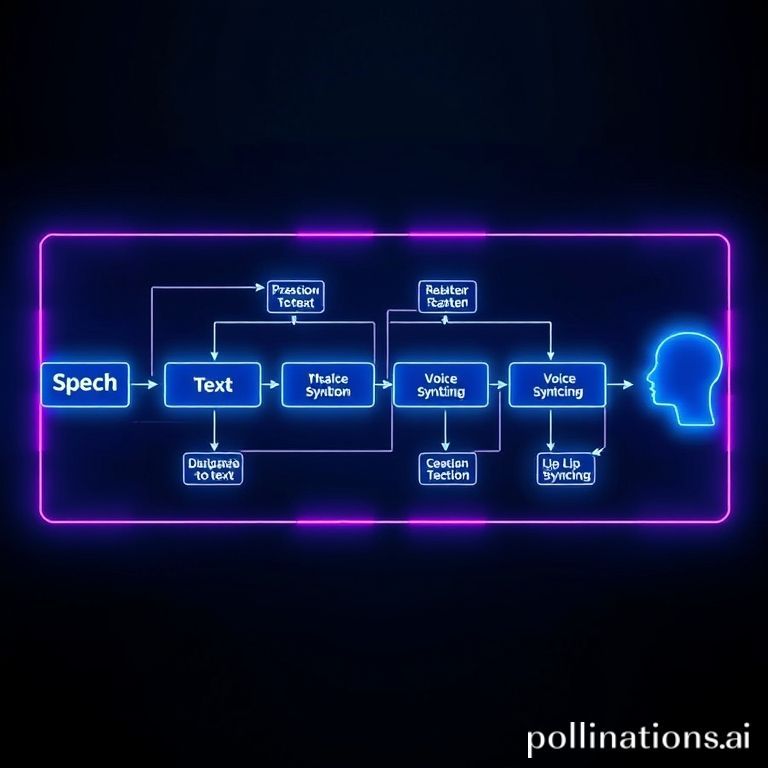

Enter the AI Maestro: How Automated Video Dubbing Works

Think of AI-powered localization as a digital orchestra, where each algorithm plays a crucial part in a complex symphony. The goal? To create a seamless, authentic viewing experience in any language. Let’s pull back the curtain on this technical marvel.

Step 1: The Digital Scribe (Speech-to-Text)

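The process kicks off with a Speech-to-Text (STT) engine. This AI listens to the original audio and transcribes every word into text. For dubbing, it also needs to record when each phrase was spoken, so the new audio can later be placed at the right moment. Modern STT models, many built on the Transformer architecture, achieve high accuracy even with background noise or tricky accents, though they still make occasional mistakes that a human reviewer may need to catch.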
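To make that hand-off concrete, here is a minimal sketch of a timestamped transcript. The `Segment` type, the `validate` helper, and the sample lines are hypothetical illustrations, not any particular STT engine’s output format. The point is that each segment’s duration becomes the time slot the dubbed line must later fit into.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Segment:
    """One timestamped chunk of transcribed speech (hypothetical format)."""
    start: float  # seconds from the start of the video
    end: float    # seconds from the start of the video
    text: str

    @property
    def duration(self) -> float:
        # Time slot the dubbed line will later have to fit into.
        return round(self.end - self.start, 3)


def validate(segments: list[Segment]) -> None:
    """Check segments are well-formed and in timeline order without overlaps."""
    for seg in segments:
        if seg.end <= seg.start:
            raise ValueError(f"segment ends before it starts: {seg}")
    for prev, nxt in zip(segments, segments[1:]):
        if nxt.start < prev.end:
            raise ValueError(f"overlapping segments: {prev} / {nxt}")


# Made-up sample of what an STT step might hand to the translation step.
transcript = [
    Segment(0.0, 2.4, "Welcome back to the channel."),
    Segment(2.6, 6.1, "Today we're looking at AI dubbing."),
]
validate(transcript)
for seg in transcript:
    print(f"{seg.start:5.1f}-{seg.end:5.1f}s ({seg.duration}s): {seg.text}")
```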

Step 2: The Universal Translator (Machine Translation)

Next, the transcribed text is handed off to a Neural Machine Translation (NMT) model. This isn’t your old, clunky phrase-by-phrase translation tool. Modern NMT systems, like those powering Google Translate or DeepL, take the context of the whole sentence into account and handle slang and nuance far better than earlier approaches, producing translations that usually feel natural rather than robotic.
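Translation is also where timing trouble starts: a sentence can come out longer or shorter in the target language, yet the dubbed line still has to fit the time the original speaker took to say it. A rough way to flag problem lines is to estimate speaking time from character count. The sketch below assumes a fixed rate of 15 characters per second and a maximum comfortable speed-up of 1.25×; both numbers are made-up placeholders for illustration (a real pipeline would measure the synthesized audio instead), and `check_fit` is a hypothetical helper.

```python
from dataclasses import dataclass

# Assumed average speaking rate; a placeholder, not a measured constant.
CHARS_PER_SECOND = 15.0
# Assumed largest speed-up before speech sounds rushed (hypothetical value).
MAX_SPEEDUP = 1.25


@dataclass(frozen=True)
class TimingCheck:
    estimated_seconds: float
    slot_seconds: float
    speedup_needed: float  # 1.0 means the line fits at the normal rate
    fits: bool


def check_fit(translated_text: str, slot_seconds: float) -> TimingCheck:
    """Estimate whether a translated line fits the original segment's time slot."""
    estimated = len(translated_text) / CHARS_PER_SECOND
    speedup = max(1.0, estimated / slot_seconds)
    return TimingCheck(
        estimated_seconds=round(estimated, 2),
        slot_seconds=slot_seconds,
        speedup_needed=round(speedup, 2),
        fits=speedup <= MAX_SPEEDUP,
    )


# Example: an illustrative Spanish rendering of a 3.5-second English line.
result = check_fit("Hoy vamos a analizar el doblaje con inteligencia artificial.", 3.5)
print(result)
```

Lines that fail the check are candidates for a shorter rephrasing rather than simply speeding up the voice.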

Step 3: The Voice Alchemist (TTS & Voice Cloning)

This is where the real “wow” factor happens. The translated text is converted back into speech by a Text-to-Speech (TTS) engine. But here’s the kicker: using **voice cloning technology**, the AI can analyze a short sample of the original speaker’s voice and carry its unique characteristics (pitch, tone, and cadence) over into the new language.

The result? You, speaking fluent Mandarin. It’s an incredible feat of AI that preserves the speaker’s identity across linguistic borders. (To learn more, check out our post, “What is Voice Cloning?”)
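How do you measure whether a cloned voice actually sounds like the original? One common approach, used in speaker verification and when evaluating voice-cloning systems, is to represent each voice as a fixed-length “speaker embedding” vector (real ones typically have hundreds of dimensions) and compare vectors with cosine similarity. The sketch below uses tiny made-up 4-number embeddings and a hypothetical 0.85 threshold purely to show the arithmetic; it does not reflect how any specific product scores voices.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors: 1.0 means same direction."""
    if len(a) != len(b):
        raise ValueError("embeddings must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError("a zero vector has no direction")
    return dot / (norm_a * norm_b)


# Made-up, tiny "embeddings" for illustration only.
original_speaker = [0.9, 0.1, 0.3, 0.4]
cloned_voice = [0.8, 0.2, 0.3, 0.5]
different_speaker = [0.1, 0.9, 0.6, 0.0]

# Hypothetical acceptance threshold; real systems tune this on data.
THRESHOLD = 0.85

for name, emb in [("cloned", cloned_voice), ("other", different_speaker)]:
    score = cosine_similarity(original_speaker, emb)
    verdict = "similar" if score >= THRESHOLD else "different"
    print(f"{name}: {score:.3f} -> {verdict}")
```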

Step 4: The Digital Puppeteer (AI-Powered Lip-Sync)

Finally, to sell the illusion, an AI model analyzes the phonemes (the distinct sounds of a language) in the new audio track and subtly alters the speaker’s lip movements in the video to match the translated speech. This AI lip-sync is the final touch that minimizes the jarring mismatch between mouth and voice that plagues old-school dubbing.
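On screen, what matters are visemes, the visible mouth shapes, and several phonemes share one viseme: /p/, /b/, and /m/ all begin with closed lips. Some pipelines map phonemes to visemes explicitly, while many neural lip-sync models learn the relationship directly from the audio. The sketch below shows the explicit version. The small mapping table is a simplified, illustrative assumption (not a standard viseme set), phonemes use ARPAbet-style labels, and the timings for the word “bump” are hypothetical aligner output. Back-to-back phonemes that share a mouth shape are merged into one cue.

```python
from dataclasses import dataclass

# Simplified, hand-made phoneme-to-viseme table (illustrative assumption,
# not a standard set). Phoneme labels are ARPAbet-style.
PHONEME_TO_VISEME = {
    "P": "closed", "B": "closed", "M": "closed",
    "F": "lip_teeth", "V": "lip_teeth",
    "AA": "open", "AE": "open", "AH": "open",
    "OW": "rounded", "UW": "rounded", "W": "rounded",
    "IY": "wide", "EH": "wide",
}
DEFAULT_VISEME = "neutral"  # fallback for phonemes not in the table


@dataclass
class Cue:
    viseme: str
    start: float  # seconds
    end: float    # seconds


def viseme_track(phonemes: list[tuple[str, float, float]]) -> list[Cue]:
    """Convert timed phonemes into viseme cues, merging adjacent repeats."""
    track: list[Cue] = []
    for phoneme, start, end in phonemes:
        viseme = PHONEME_TO_VISEME.get(phoneme, DEFAULT_VISEME)
        touching = bool(track) and abs(track[-1].end - start) < 1e-6
        if touching and track[-1].viseme == viseme:
            track[-1].end = end  # extend the previous cue instead of adding one
        else:
            track.append(Cue(viseme, start, end))
    return track


# Hypothetical aligner output for the word "bump" (B AH M P).
timed = [("B", 0.00, 0.06), ("AH", 0.06, 0.18), ("M", 0.18, 0.25), ("P", 0.25, 0.32)]
for cue in viseme_track(timed):
    print(cue)
```

Merging matters because the lips stay closed from /m/ straight into /p/; emitting two separate cues could make an animation system “reopen” the mouth in between.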

Unleashing Global Reach: Real-World Magic of AI Dubbing

This technology isn’t just a cool party trick; it’s a powerful tool with game-changing applications across industries.

- E-Learning Platforms: Educators can now offer their courses to a global student body, making knowledge more accessible than ever before.

- Marketing & Advertising: Brands can rapidly deploy localized ad campaigns, creating a much stronger connection with international markets.

- Indie Filmmakers & YouTubers: Individual creators can finally compete on a global scale, using **automated video translation** to reach fans who were previously out of reach.

- Corporate Training: Multinational companies can efficiently create training materials for their employees worldwide, ensuring consistency and clarity.

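Many of these services offer API access. Here’s a rough sketch of what submitting a job might look like. The `video_localizer_api` module and its functions are hypothetical stand-ins rather than a real SDK, so check your provider’s documentation for the actual calls:

```python
import time

import video_localizer_api  # hypothetical client library standing in for a real SDK

# Configure the API client
video_localizer_api.api_key = "YOUR_API_KEY"

# Define the localization job
job_config = {
    "source_video_url": "https://example.com/my_video.mp4",
    "source_language": "en-US",
    "target_languages": ["es-ES", "fr-FR", "ja-JP"],
    "voice_cloning": True,
    "lip_sync": True,
}

# Start the job, then poll until it finishes (dubbing can take minutes)
job_id = video_localizer_api.start_job(job_config)
status = video_localizer_api.check_status(job_id)
while status not in ("complete", "failed"):  # "failed" is an assumed status value
    time.sleep(30)  # wait between checks instead of hammering the API
    status = video_localizer_api.check_status(job_id)

if status == "complete":
    results = video_localizer_api.get_results(job_id)
    for lang, url in results.items():
        print(f"Localized video for {lang}: {url}")
else:
    print(f"Job {job_id} failed")
```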

The Uncanny Valley: Navigating the Challenges of AI Dubbing

As revolutionary as this technology is, it’s not without its pitfalls. We’re still navigating the “uncanny valley,” where things are almost perfect… but not quite.

“AI can replicate the ‘what’ of human speech with incredible accuracy, but the ‘how’—the subtle emotional subtext—remains the key challenge. For now, a human touch is still essential for high-stakes creative work.”

- Emotional Nuance: AI can struggle to convey sarcasm, excitement, or sorrow with the same authenticity as a human voice actor.

- Cultural Context: A direct translation isn’t always the best one. Idioms and cultural references often get lost, requiring human oversight for true localization.

- Lip-Sync Imperfections: While constantly improving, AI lip-sync can sometimes look slightly “off,” which can be distracting for viewers.

- Ethical Concerns: The power of voice cloning technology raises important ethical questions about consent, deepfakes, and misuse.

Beyond the Horizon: The Future of Automated Video Translation

The pace of innovation in this field is breathtaking. The limitations of today are the solved problems of tomorrow. Here’s a glimpse of what’s coming next:

- Real-Time Dubbing: Imagine watching a live international news broadcast or sporting event, dubbed into your language in real time. This is the holy grail.

- Hyper-Expressive Voices: Future TTS models trained on vast datasets of emotional speech should be able to deliver far more expressive performances, narrowing the gap with human actors.

- Visual Translation: AI won’t just change the audio. It will also be able to seamlessly replace on-screen text, signs, and graphics to match the target language.

This technology is rapidly moving from a niche tool to a standard part of the content creator’s toolkit. It’s not about replacing human creativity but augmenting it, allowing stories to reach the global audience they deserve. For more ideas, see our list of the Top AI Marketing Tools to revolutionize your workflow.

Frequently Asked Questions about AI Video Localization

1. What is AI video localization?

AI video localization is the process of using artificial intelligence to automatically adapt video content for different languages and regions. This includes transcribing audio, translating text, generating new voice tracks (dubbing), and even adjusting lip movements to match the new audio.

2. Is AI voice cloning legal and ethical?

The legality and ethics are complex and vary by jurisdiction. As a rule of thumb, you are on much firmer ground when you have explicit consent from the person whose voice is being cloned. Using it to create deepfakes or impersonate someone without permission is unethical and, in many places, illegal. Always use services with clear terms of service regarding consent.

3. Can AI dubbing replace human voice actors?

For many applications like corporate training, e-learning, and social media content, AI is a fantastic and cost-effective solution. However, for high-end entertainment like feature films, human voice actors are still superior at conveying deep emotional nuance and delivering a captivating performance. For now, AI is more of a powerful tool than a complete replacement.

4. What are the best tools for automated video translation?

The market is evolving quickly! Some popular and powerful tools include HeyGen, Rask.ai, and Argil.ai. For a deeper analysis of the landscape, authoritative sources like 3Play Media offer excellent insights into the pros and cons of different approaches.
